Every important business activity is measured, including finances, inventory, headcount, payroll and client engagement. The learning function is no exception, and chief learning officers are often asked to measure learning impact. Yet between 2010 and 2015, more CLOs were dissatisfied than satisfied with the tools, resources or data available to them for doing so.

As organizations increasingly use analytics as a business decision-making tool, 3 out of 5 CLOs report that their divisional leaders and line managers regularly use learning and development measurement data, but only half of C-suite executives review such data quarterly or monthly, according to IDC’s survey of the Chief Learning Officer Business Intelligence Board.

Although learning and development measurement seemed to lag behind other business areas for the past five years or so, this year more than 60 percent of CLOs report that their measurement programs are “fully aligned” with their learning strategy. Further, this is the first survey since 2010 in which more CLOs are satisfied with their organizational approach to measurement than dissatisfied.

Taking Measures

Learning professionals generally agree on the value of measurement. When done properly, it can demonstrate learning’s effect on the company’s top and bottom lines. Effective measurement also ensures that organizational initiatives more frequently include appropriate learning components, which helps the learning organization increase its relevance.

Across enterprises overall, measurement collection and reporting can be described as basic but improving. Almost 4 out of 5 enterprises use general learning output data (courses, students, hours of training and so on) to help justify learning impact. Satisfaction with learning programs is used as justification in 7 out of 10 organizations. At a more complex level, more than half report learning output aligned with specific learning initiatives. More advanced measures, such as employee engagement and performance, are used by fewer than half of organizations.

Business impact and return on investment measures are often constrained by time, resources and the lack of a solid measurement structure, and no more than a third of enterprises use them. Key metrics may include employee performance, customer satisfaction, sales figures and more. The challenge remains gaining access to reliable metrics and finding the time and resources to conduct meaningful analysis.

In 2008, a majority of enterprises reported a high level of dissatisfaction with learning measurement. By 2010, that feeling had moderated substantially as analytics became mainstream in other areas of the business. But CLOs’ dissatisfaction remained high because of rising expectations and continuing challenges with resources and leadership support. In the past two years, satisfaction has increased substantially (Figure 1).

Perception and Impact

There appears to be a strong correlation between effective measurement and the perception of impact. When measurement programs are weak, most CLOs report that their influence and role in helping to achieve organizational priorities are also weak. When organizations are very satisfied with their measurement approach, they also believe learning and development plays a more important role in achieving organizational priorities.

As in prior years, the common forces working against satisfaction with measurement programs remained a combination of capability and support. CLOs report frustration that measurement is “not a priority in the organization.” This is often because there is a “lack of data or access to data” or “a lack of leadership support.” But even when the organization does perform measurement activities, some remain “focused on efficiency measures rather than effectiveness.”

Resources and leadership support are essential to developing an effective measurement program, yet getting that support remains a challenge. When the organization is aligned, however, CLOs report interesting and effective programs. For instance, one organization measures “the difference in profitability per employee between offices and the number of internally developed, job-specific courses completed” and it found a “dramatic difference in profitability between offices where they had taken [only a] few courses and those that had higher completion rates.”

Because of the various forces holding measurement use back, there has been little change in related processes. Consistent with past results, about 80 percent of companies do some form of measurement. About one-third of organizations use a manual process, and the rest use some form of automated system.

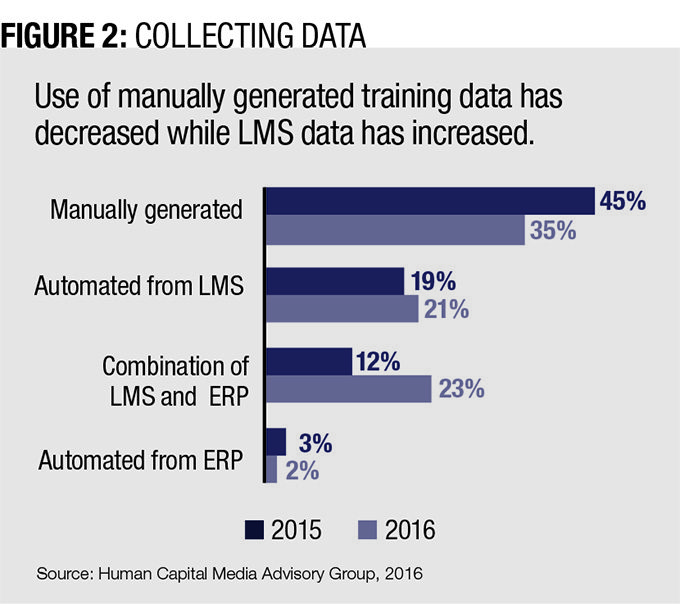

The mix of collection approaches is very similar to how it was performed as far back as 2008. There has been a slight decrease in the percentage of respondents who use a manual process and an increase in the percentage of enterprises that predominantly use LMS data.

CLOs can only use the collection tools they have, but the systems used for data collection seem to correlate with the degree of satisfaction. Using learning management system data combined with data from an enterprise resource planning system, or using LMS data alone, is associated with relatively high satisfaction (Figure 2). Other research shows that ERP data alone is not specific enough to evaluate learning impact appropriately, but LMS data alone is also insufficient.

Learning and Outcomes

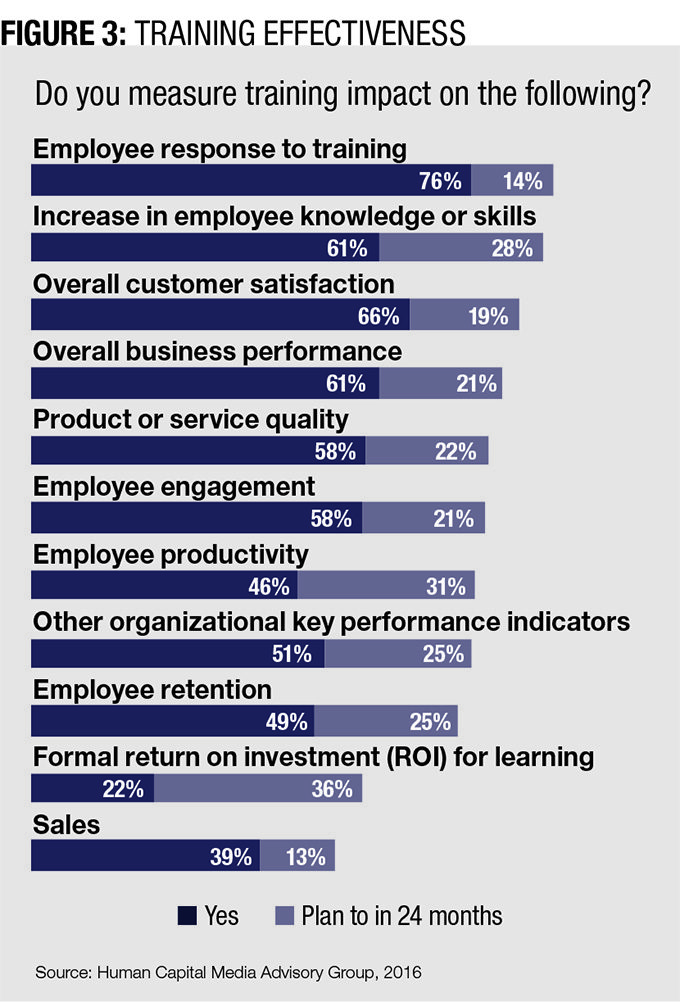

There continues to be a meaningful increase in the percentage of enterprises working to correlate learning with organizational change. Organizations most frequently evaluate employees’ reactions to learning, as well as their knowledge through quizzes and tests. Almost half of enterprises measure learning’s impact on customer satisfaction. However, some areas still need work. Although it is improving, just over half of organizations evaluate learning’s effect on employee engagement, and fewer than 50 percent measure productivity and employee retention (Figure 3). Though learning programs aren’t always expected to affect quality or retention, the opportunity to use analytics to establish learning’s impact on a wider set of business outcomes is apparent.

Effective measurement can affect the broader enterprise as well as the learning organization’s bottom line. Organizations that consistently tie learning to specific changes are more likely to train. Organizations that can focus learning efforts on the most appropriate people and topics can discard less valuable training and spend less time and money on it.

Despite the obvious challenges, organizations are making progress. Although only 53 percent of CLOs are satisfied to some extent, nearly 45 percent use employee performance data to help evaluate learning impact, up almost 20 percent from last year. And nearly 10 percent more CLOs report that learning output measures align with corporate initiatives.

Measurement is a challenge, and it is something many learning leaders strive to do better. CLOs can take several steps to effectively demonstrate learning impact. Three of the most significant practices are:

- Set stakeholders’ expectations. Help stakeholders understand the commitment required to see assessment projects through to the end. This will minimize resistance during the measurement phase.

- Define success early. By identifying and benchmarking key metrics before training is delivered, CLOs make post-training results easier to evaluate and defend.

- Establish metrics at the project or business unit level. It is most effective to demonstrate learning impact at the initiative or business unit level. Working with smaller groups typically presents fewer obstacles that can confound the measurement process.

Companies that incorporate even these simple guidelines into their assessment methodology should see a marked improvement in the success and relevance of their measurement initiatives and learning overall.

Every other month, IDC surveys Chief Learning Officer magazine’s Business Intelligence Board on an array of topics to gauge the opportunities and attitudes that make up the role of a senior learning executive. For this article, more than 171 CLOs shared their thoughts on learning measurement.

Cushing Anderson is program director for learning services at market intelligence firm IDC. Email editor@CLOmedia.com.